Win The Data War: How normal people can survive and thrive in the data abundance era

- Jeff Hulett

- Nov 29, 2023

- 83 min read

Updated: Sep 18, 2025

There is a significant gap between the average person's statistical understanding and the practice of data science. Reflecting this, the tech industry—which employs the majority of data scientists—has adopted a term for its customers borrowed from drug culture: it refers to them as "users." As Sean Parker, former President of Facebook, admitted, “We designed it to be addictive. Social media exploits a vulnerability in human psychology… God only knows what it’s doing to our children’s brains.” This deliberate exploitation of human tendencies positions tech companies as "dealers" or "pushers," delivering digital dopamine hits. They meticulously design the "user interface" and optimize the "user experience" to maximize "user engagement." This strategy parallels that of tobacco companies, which also refer to their customers as "users." While tech companies deliver data through apps and smartphones, tobacco companies rely on cigarettes to deliver nicotine—distinct products, yet remarkably similar dopamine-triggering strategies.

The challenge, however, is that data, unlike nicotine, is unavoidable. Data underpins how our brains process the world and make decisions. Our brains naturally attend to data as a core survival mechanism. Unfortunately, the statistics taught in schools often fail to equip individuals with the tools they need to harness data effectively. This article aims to bridge that gap. By crossing this bridge, you’ll gain decision confidence through practical and intuitive statistical understanding. This confidence is further fortified by tools and insights from data science and business practices. Along the way, you’ll discover resources to help you delve deeper and turn the language of data into your Information Age superpower!

Having been on the dealer's side, I now aim to empower users to take control—equipping them with data and the confidence to make informed decisions.

We will explore gaining knowledge from data by harnessing the power of statistics. Statistics is the language of data. A statistical language starting point is provided by building upon the time-tested statistical moments framework. It shows why learning the world through the data lens is helpful and increasingly necessary.

Just like grammar rules for language, statistical moments are essential for understanding our data-informed past as a guide for navigating the future. As those statistical grammar rules become routine, you will effectively understand the data defining our world. This understanding grows to be a permanent feature guiding your success. Data, as representing our past reality, contains nuance, exceptions, and uncertainties adding context to that historical understanding. The statistical moments framework helps unlock the power of our data.

We begin by making the case for data and why learning the language of data is important. Tools, called 'personal algorithms,' are introduced to help you transform your data. Then, we will jump into the major statistical moments' building blocks. They serve as the article's table of contents. Intuitively understanding these moments provides the grammar and a path to understanding your past reality. The path includes an approach to identify and manage inevitable uncertainties and potential ignorance. Context-strengthening examples and historical figures are provided from science, personal finance, business, and politics.

The Data Explorer's Journey Map

Introduction: The case for data and the data bridge

Don't Be a Blockhead → 0th moment: unity

Our Central Attraction → 1st moment: the expected value

Diversity by Degree → 2nd moment: the variance

The Pull of the Outliers → 3rd moment: the skewness

The Tale of Tails → 4th moment: the kurtosis

Fooling Ourselves → a moment of ignorance

Next Steps → Better Living by Being Bayesian and Practicing Pareto

Conclusion, appendix, and notes

Please follow the links for more formal definitions of the statistical moments. Knowledge of statistics is helpful but not necessary to appreciate this article. For a nice descriptive, probability, and inferential statistics primer, please see this link. Thanks to Liberty Munson, Director of Psychometrics at Microsoft, for providing the excellent primer.

About the author: Jeff Hulett is a career banker, data scientist, behavioral economist, and choice architect. Jeff has held banking and consulting leadership roles at Wells Fargo, Citibank, KPMG, and IBM. Today, Jeff is an executive with the Definitive Companies. He teaches personal finance at James Madison University and leads Personal Finance Reimagined - a personal finance and decision-making organization. Check out his latest book -- Making Choices, Making Money: Your Guide to Making Confident Financial Decisions -- at jeffhulett.com.

1. Introduction: The case for data and the data bridge

Data and algorithms are different.

I offer this disclaimer because data and algorithms are often confused. Data represents our past reality. Algorithms transform data. They are different. Data has already happened. An algorithm is a tool to transform data intended to predict and impact the future. An organization’s data-transforming algorithm may be helpful to you - especially when your attentions are aligned with that algorithm’s objective. More often today, an organization’s data-transforming algorithm is even more helpful for optimizing some other objective -- such as maximizing shareholder profit or filling political party coffers. Please see the appendix for more context.

But algorithms are not just for organizations trying to sell you stuff. You should identify, test, and periodically update an intuitive set of personal algorithms to make a lifetime of good decisions. Personal algorithms are an intuitive set of rules you use to transform your data. Your personal algorithms are either informal or, more necessary today, enhanced with the help of personal decision tools. Together, we will build an intuitive understanding of data in the service of implementing your personal algorithms. Our focus is on using the statistical moments as a bedrock for that data understanding. Our data exploration and personal algorithms will be enhanced by tools and methods, such as Bayesian inference, the Pareto Principle, and choice architecture tools like Definitive Choice. Choice architecture helps implement your personal algorithms.

Choice Architecture and Personal Algorithms.

Behavioral economist and Nobel laureate Richard Thaler said:

“Just as no building lacks an architecture, so no choice lacks a context.”

A core behavioral economics idea is that all environments in which we make a choice have a structure. That structure impacts the decision-maker. There is no "Neutral Choice" environment. People may confuse not making a decision as safer. Not making a decision is no safer, and likely much less safe, than actively making a decision. The choice environment is guided by the subtle incentives of those providing the environment. Those providing the environment almost never have incentives fully aligned with your welfare.

Once you accept this premise, you will see the world differently.

Let's explore a retirement savings example. Many companies provide 401(k)s or similar tax-advantaged retirement plans as a company benefit. As part of the new employee onboarding process, the company provides its choice architecture to guide the employee to make a voluntary retirement selection. Next, we will explore the retirement plan selection process from both the employer's and the employee's perspective.

A company may provide many mutual funds and investment strategies to assist employees in making a retirement plan decision. Their rationale for the volume of choices is partly the recognition that all retirement needs are unique to the individual's situation. The company does not want to influence the employee with a particular retirement strategy. They want to ensure the employee's choice is informed by a wide array of possible alternatives. The well-intended volume of choices should help the employee.

But does it?

But let’s look at it from the employee’s standpoint. This choice environment, with its high volume of complicated-looking alternatives, seems noisy and overwhelming. Even though, the plan administrator likely provides some means of filtering those choices. The overwhelming noise perception occurs because the employee is not accustomed to making retirement plan decisions. The volume of alternatives amplifies the impact on their negative perception. Also, their attention is more focused on being successful in their new job. In fact, research shows, this sort of choice environment discourages the employee from selecting ANY retirement plan. A typical employee narrative may be: "300 choices!? Wow, this looks hard! Plus, I have so much to do to get onboarded and productive in my new company. I will wait until later to make a decision." ... and then - for many - later never comes.

A complicated, overwhelming choice environment causes savings rates to be less than what they otherwise could have been. A compounding factor is that, traditionally, the default choice provided by the employer is for the employee NOT to participate in the retirement program. This means that if the employee does not complete some HR form with a bunch of complicated choices, then they will not save for their own retirement. Behavioral economics research shows this intuitive outcome. When the choice environment is more amenable, people are more likely to choose options benefiting them. [i-a1]

Retirement plans are just one example. This sort of choice environment challenge appears in subscription cancellations, insurance claims, rebate redemptions, and medical billing. In each case, complexity discourages action, increasing profits through consumer inaction. These are low-alignment choices: your gain often comes at the company’s loss, so the choice design is tilted against you.

By contrast, high-alignment choices occur when the company benefits directly from your action. Think of Amazon when you buy a consumer product or Netflix when you stream a movie. In these cases, companies invest heavily in making the choice process smooth, seamless, and even addictive. Their profitability depends on your engagement, so frictions are removed, interfaces are simplified, and nudges encourage more consumption.

Thus, the overwhelming noise perception is captured in the behavioral truisms:

A difficult choice that does not have to be made is often not made.

- and -

Not making a choice is a choice.

Even though, like in the case of a retirement plan with employer matching and tax advantages, making almost ANY choice would be better than making no choice.

Regarding company incentives, the company will usually match employee contributions to the retirement plan. So if the employee does not participate, the company will not need to contribute. An employee not participating reduces the company's retirement expense. Thus, the unused match will drop to the bottom line and be available to the equity owners. A company's default choice environment is a function of its complex incentives and self-interests. As discussed in the appendix, the employee is only one of four beneficial stakeholders vying for the attention of company management. Thus, based on a one in four equally weighted average, management's stakeholder balance will not favor the employee.

Regarding the company’s management relationship with its stakeholders, an unused benefit is like a double win - providing two for the price of one!

Win 1 – The company put the benefit on the table. If an employee chose not to use it, that is on them. We cannot make them save.

Management narrative: Good for the employee.

Win 2 – If the employee does not use the benefit, then the unused benefit drops to the bottom line. Management narrative: Good for the shareholding equity owner.

Retirement planning is one of many choice-challenged examples we face daily. Research suggests that thousands of choices are made daily. [i-a2] The essential point is that modern life is characterized by overwhelming data abundance to influence those choices. As we discuss later, our smartphones and other devices are like data firehoses - spewing data on the user. Whether retirement or many other important choices, the volume and complexity of those choices often discourage normal people from making any choice. The default has become the standard and that standard is set by organizations usually not fully aligned with your welfare.

In the world of corporate marketing and accounting, there’s a term for when the choice environment enables a consumer not to take advantage of an earned benefit. That term is—appropriately—Breakage. A classic example is airline credit cards. These rebate programs are huge money makers not only because of interest charges, but also because customers often fail to redeem the travel benefits they’ve earned. This unused value becomes pure profit for the company.

But Breakage doesn’t happen by chance—it is often the result of intentionally poor choice design.

This brings us to a powerful concept in behavioral economics known as Sludge. Sludge refers to friction that makes it challenging for people to do what’s in their best interest—like redeeming rewards, canceling a subscription, or accessing a benefit. While “nudges” are used to help people make better choices, sludge works in the opposite direction. It manipulates users by exhausting their time, attention, or motivation, often leading to inaction that favors the company.

For example, with airline reward programs, there’s nothing preventing companies from offering a simple cash-back equivalent instead of complex travel restrictions. But the travel reward’s friction—booking limitations, blackout dates, expiration rules—is by design. That’s sludge in action, and its goal is Breakage.

Why This Matters

Sludge and Breakage remind us that the choice architecture created by companies is rarely aligned fully with your best interests. It is built to optimize their metrics, and while consumer outcomes may be part of that objective, they are easily crowded out by the louder demands of other stakeholders competing for management’s attention.

That’s why constructing a choice architecture tailored to your needs is essential. Whether it is setting personal rules for redeeming rewards, automating beneficial behaviors, or using tools that clarify complex decisions, you must take control of your decision environment. In other words, you need a personal algorithm—a system that helps you make confident, consistent, and welfare-enhancing decisions.

Don’t let someone else’s sludge become your lost opportunity.

To explore AI and personal algorithms in the context of making a pet decision, please see:

Data is the foundation.

On the way to implementing or updating your personal algorithms, we must begin by providing a bridge to build your data foundation. Personal algorithms are greatly improved when the beneficiary of those algorithms has a solid understanding of data and statistics. This is the essential data bridge - spanning from the land of choice-challenge to the successful decision-making happy place.

Motivation connects our past data

to our algorithmically influenced future

In my college personal finance class, part of the curriculum is to help students understand the interaction of data, the power of organizational algorithms, and how to leverage or overcome them with a personal algorithm-enhanced decision process.

From data scarcity to data abundance

In the last half of the 20th century, the world shifted from the industrial era to the information era. The changing of eras is very subtle. For those of us who lived through the transition, there was no official notice or “Welcome to the Information Era” celebration. It just slowly happened—like the proverbial boiling frog—as innovation accelerated and our culture adapted. Era changeovers are always backward-looking, often recognized only after the effects have become historically visible.

This shift forces us to rethink how we relate to data, beliefs, and decisions. For most of human history, information was scarce, and our brains evolved to thrive in such conditions. Over just a few decades, scarcity has flipped to abundance. Data is no longer rare; it is relentless. Yet our genome remains wired for data frugality, not flood. Evolution may take millennia to adapt, but we cannot wait. We must train our minds to function in this new landscape, where the mismatch between biology and environment has widened the cognitive gap. The challenge—and opportunity—is to close this gap with better tools and frameworks for decision-making.

In the industrial era, computing power was limited. As a result, math education focused on training people to be the computers. We were the gap fillers for society’s increasing computational demands. Schools prepared students to perform functions that machines could not yet execute. But that has changed—dramatically.

In today's information era, computing power is both ubiquitous and cheap. Moore’s Law exponentially boosted processing speed, but the true unleashing of data abundance came when bandwidth exploded, enabling information to flow effortlessly across people, systems, and geographies. No longer is data trapped in isolated silos. Instead, cloud platforms and APIs make it instantly portable, shareable, and monetizable. The bottleneck is no longer processing power or access—it is attention. In this new economy, attention—not data—is the scarce resource.

In both my college and high school classes, I facilitate an exercise where students share their smartphone screen-time reports. Many reveal usage well over five hours per day. They also admit much of this time is “low investment”—a donation of their attention to the profitability of social media platforms rather than to their own growth.

The same pattern plays out more broadly. Consumers routinely trade personal data and precious attention for convenience or entertainment. This “Dopamine Trade” fuels a symbiosis between artificial and biological intelligence, with companies fine-tuning algorithms designed to capture and redirect our neurobiology.

Data abundance defines the modern information era. Success now depends on identifying your essential data, filtering out the noise, and using computing tools—both machine and cognitive—to make decisions with clarity, confidence, and purpose.

See: A Content Creator Investment Thesis - How Disruption, AI, and Growth Create Opportunity This article provides background for why people are willing to give up their data to the social media platforms.

For most people, today's challenge is no longer about learning to do the work of a computer, as was emphasized during the industrial age. Instead, the challenge is learning how to harness abundant data, apply modern technology, and focus scarce attention toward human-centered, adaptive decision-making. Yet our formal math education systems remain slow to evolve. They continue to emphasize deterministic, rule-based computation rooted in an industrial era when information was scarce and processing power was expensive.

This misalignment is not just a delay—it’s a structural problem. As Upton Sinclair famously noted, "It is difficult to get a man to understand something, when his salary depends on his not understanding it." The same logic applies to organizations. Institutional incentives—like tenure systems, standardized testing, and curriculum mandates—reinforce the status quo. Risk-aversion, bureaucratic inertia, and fear of political controversy further discourage reform. Even well-meaning educators are often constrained by legacy systems that reward compliance over innovation. [i-c]

This is why meaningful change in education rarely comes from within. It comes from outside—from those who see a better way to meet the demands of the information era and act on it. These are parents, technologists, behavioral scientists, entrepreneurs, and lifelong learners who understand that data literacy, statistical thinking, and decision science are the new pillars of economic and personal success.

Sal Khan, founder of Khan Academy and author of The One World Schoolhouse, has shown how millions of students can thrive through personalized, mastery-based learning. As he warns: “Schools today are still organized like factories, with rigid schedules and standardized pacing. But learning should be personal, flexible, and mastery-based. The one-size-fits-all model is obsolete in the information age.”

So, will the traditional education system eventually change? Sure—but only after it's dragged forward by those already building the future. Legacy systems don’t typically transform; they wither as their irrelevance grows. That’s why the responsibility to adapt rests with us. If your formal education didn’t teach you how to think statistically or make structured decisions, now is the time to build those skills yourself. Because the future doesn’t wait—and neither should you.

The big change – From data scarcity to data abundance

Data scarcity was when the most challenging part of a decision was collecting data. The data was difficult to track down. It was like people were data foragers, where they filled a basket with a few pieces of difficult-to-obtain data they needed for a decision. Since there was not much data, it was relatively easy to weigh and decide once the data was located.

Data abundance has changed our relationship with data 180 degrees in just the last few decades. Consider your smartphone. It is like the end of a data firehose. Once the smartphone is opened, potentially millions of pieces of data come spewing out. Plus, it is not just smartphones; data is everywhere. But it is not just the volume of data, it is the motivation of the data-focused firms. The data usage has a purpose... and that purpose is probably not your welfare.

"The best minds of my generation are thinking about how to make people click ads. That sucks." - Jeff Hammerbacher, a former Facebook data leader.

The challenge is no longer foraging for data. Our neurobiology, as tuned by evolution, is still calibrated to the data scarcity world. It is like no one told our brains that how we make decisions is dramatically different today. The challenge is now being clear about which of the overwhelming flood of data is actually needed. The challenge is now to curate data, subtract the unneeded data, and use the best decision process. Unfortunately, the education curriculum often teaches students as if we are still in the data scarcity world.

Economics teaches us that what is scarce is what creates value. So, since data is abundant, what is it that creates value? In the information era, it is scarce human attention creating value for companies trading in data abundance.

For a "Go West, Young Man" decision made during the 1800s as compared to a similar decision today, please see the article:

The big change – From data scarcity to data abundance

The Big Change: Scarcity, as an economic lever, has changed from data to attention.

The Architecture of Belief

Think of belief as a blend of habit’s convenience and fast information processing. A belief acts like a pre-packaged bucket of information—ready to trigger action with little deliberation. Because it is wired for speed, belief lives in the intuitive parts of the brain that favor immediacy over language. This efficiency comes at a cost. Our neurobiological belief system offers weak cognitive signals when it is time to update, leaving many beliefs to persist long after their usefulness has expired. Later, after we build a foundation with statistical moments, we will introduce Bayesian Updating and other decision technologies as tools for strengthening this belief-updating process.

Equally important is where beliefs come from. In childhood, they are handed down by families, cultures, and communities of origin. These inherited anchors are powerful—sometimes constructive, sometimes harmful. Because beliefs operate quickly and often unconsciously, we may carry them into adulthood without scrutiny. Yet all healthy adults must eventually take ownership of their beliefs, questioning inherited ones and updating them as situations demand. For the childhood belief updating framework, please see:

This makes statistics the language of interpreting our past reality in the service of updating those beliefs. Like any other language, the language of statistics has grammar rules. Think of statistical moments as the grammar for interpreting our past realities. The better we understand the grammar rules, the better we can:

Learn from our past reality,

Update our beliefs, and

Make confidence-inspired decisions for our future.

‘Past reality’ may be a nanosecond ago—just long enough for the light of the present to reach your eyes. Or it could be the accumulated experience passed down from distant ancestors encoded in your neural architecture. Either way, our brains are not simply recording devices—they are predictive engines, constantly using past data to anticipate what comes next.

Consider hiking through a nearby wilderness area. The trail is technical—roots, rocks, inclines, and declines make every step a decision. Your brain, trained by evolution, handles this effortlessly. With each footfall, it looks a fraction of a second ahead, evaluates multiple options, and selects the best one to keep you upright and moving forward. Over a few miles, this happens tens of thousands of times. People often think they are tired because of the physical effort—but it’s the cognitive demand, the relentless stream of micro-predictions, burning many of the calories.

Populations, samples, and diversity

Whether making decisions impacting the next moment for your hike or impacting your life direction years from now, this shows why introducing the right data to your brain—and doing so clearly and consistently—is critical for better outcomes. To make sense of the right data, we must first define the categories that organize it—this begins with understanding how populations and their characteristics are statistically described. A group of people is known as a population. Populations are described by distributions, which tell us how often unique traits occur. These patterns help us calculate probabilities, enabling more accurate predictions of future outcomes.

We may share broad similarities as humans, but it’s our unique variations that matter most in decision-making. Understanding those variations—and how they shift over time—is at the heart of statistics. The more effectively you connect your brain to meaningful, structured data, the better your predictions—and your decisions—will become.

Diversity goes beyond typical characteristics, like gender, race, and eye color. Even more impactful is our diverse behavior generated by the uncertainty we face in our lives. Those uncertainty characteristics include:

a) the incomplete and imperfect information impacting most situations,

b) the dynamic, interrelated nature of many situations, and

c) the unseen neurobiological uniqueness we each possess.

This means even the definition of rationality has been redefined. There was once a belief that rationality could be calculated as a single point upon which all people converge. This 'robotic' view of rationality was convenient for mathematics but did not accurately describe human behavior. Instead, today, rationality is more accurately understood through the eyes of the diverse beholder. The same individual is often diverse across different situations because of uncertainty, framing, and anchors. This means the “you” of one situation is often different than the “you” of another situation because of our state of mind at the time the situation is experienced and how seemingly similar situations inevitably differ. Certainly, the different "us" of the same situation and time are also divergent, owing to individual neurodiversity.

This graphic is an excerpt from the article: Becoming Behavioral Economics — How this growing social science is impacting the world

Our hunt is to understand the population's diversity by learning about its past reality and by applying our unique and varying rational perspectives. But rarely can data be gathered on the entire population. More often, we must rely on samples to make an inference about the population. Next, the challenges of reducing the population to a sample are explored.

Tricky samples and cognitive bias

Sample data drawn from others in the population can be difficult to interpret. Because people are so diverse, traditional statistical tests—t-tests, ANOVA, and related comparisons—can sometimes mislead if their assumptions do not fit the real world. This is why understanding statistical moments as the bedrock of these tests is essential. They clarify what the test is really measuring, and where hidden assumptions may be shaping the results. Just as importantly, they remind us that each of us carries unique priors. If those priors are ignored, the inferential quality of statistical tools diminishes.

Complicating matters further, our brains are prone to sampling tricks. Even a single experience—a “sample of one”—can feel representative, triggering representativeness bias. This tendency to overgeneralize easily connects to a deeper, more pervasive bias: confirmation bias. Under situational uncertainty, framing, and anchoring, we selectively notice and elevate samples that appear to validate our prior beliefs, while discounting others. The problem has only grown in the information age, where an abundance of data makes it easier to find a slice of evidence that “proves” what we already believe.

The goal of this article is to build a baseline understanding of statistical moments and then push beyond—applying them in a diverse world where assumptions matter and priors differ. For readers seeking more technical grounding, see our Statistics Primer. Key sections—“Population vs. Sample,” “Hypothesis Testing,” and guidance on t-tests and ANOVA—offer tools to judge whether a sample is representative and whether observed differences are meaningful. Used with care, these methods improve inference from both personal experience and larger datasets.

Next is a typical decision narrative descriptive of the environment leading to confirmation bias and a less-than-accurate decision:

Notice, this is the first time we present the variable X to represent the situation and the variable Y to represent outcomes. The X–Y framework is central to the following statistical moments sections. This narrative is typical of how people experience their decision environment and motivations. The challenge is the past environment influencing a situation, which leads to an outcome, represents only a single observation within the total population. Your sample size of one is likely too small and unique to make a robust inference. To be clear, this does NOT mean your past experience has no decision value... of course it does. Our evolutionary biology is wired such that being inaccurate and alive is better than being accurate and dead. However, blindly following our past experiences as a guide to the future may not include other past realities to help inform our decisions. Thus, except for life and death situations, using the wisdom of the crowd is often better than not.

When a sample size of one is the best decision approach: When my children were young, my wife and I took family trips to Manhattan near Central Park. Our home was in a much less dense Washington DC suburb. So our New York City experience was very different than our suburban customs. We took long walks on the gridded Manhattan streets. Not infrequently, a car would not yield the right-of-way to us walkers. It was scary. We needed to have our heads on a swivel before stepping off the curb.

This annoyed my children. They wanted to know why we had to be so careful because it was the cars that broke the rules. My response was: “It is always better to be wronged and alive than right and dead.”

With the exception of those life-and-death examples, the sample size of many is a more accurate decision approach. Robyn Dawes (1936-2010) was a psychology researcher and professor. He formerly taught and researched at the University of Oregon and Carnegie Mellon University. Dr. Dawes said:

"(One should have) a healthy skepticism about 'learning from experience.' In fact, what we often must do is to learn how to avoid learning from experience."

Properly understanding your past reality in the present decision context is doable with the appropriate decision process. Part of being a good data explorer is using a belief-updating process including a suitable integration of our and others' past reality. A proper decision process helps you avoid confirmation bias and achieve conviction in your decision confidence.

Think of confirmation bias as a mental shortcut gone bad. Most mental shortcuts provide effective or at least neutral heuristic-based signals. [iii] Referring back to that car lesson with my children, my instinct to instruct my children for safety is a helpful and instinctive heuristic. I seek to protect my children without really thinking about it - well, except for that pithy response about the right and the dead. But confirmation bias occurs when a mental shortcut leads us to make a poor decision. As the next graphic illustrates, confirmation bias occurs when only a subset of evidence is used to make a decision. While the current set of information may be convenient and apparently confirms a previous belief, the decision-maker ignores a fuller set of data that may be contrary to the existing belief. This kind of cherry-picking bias leads to a reasoning error called an error of omission. Errors of omission are tricky because, technically, the subset of information is not wrong; it is simply incomplete to draw the appropriate conclusion.

A politician's example for reasoning errors: Fact-checking is often done to detect incorrect statements of the data the politician provides. A false statement is also known as an error of commission. However, the challenge is not necessarily what the politician said, but what the politician did NOT say. Politicians regularly engage in providing incomplete fact sets. Errors of omission are a) different than their error or commission cousins and b) generally tolerated or not detected by the public. Politicians regularly and conveniently leave out data - an error of omission - when trying to sell a particular policy or campaign plank.

Could you imagine a politician saying, “Here are all the reasons why this is a great policy decision! But wait! Here are several other reasons that may make this policy decision risky and potentially not effective. There are many tradeoffs. The chance of success depends greatly on the complex and unknowable future!” We value leaders who govern honestly. There are complex facts and tradeoffs necessary to make a great decision. But a wishy-washy candidate would struggle to get elected. Political theater and a complete rendering of complex policy decisions are very different.

It is not clear whether the politician is selfishly motivated to commit errors of omission, as part of a goal to grow their power base. Alternatively, those errors may be selflessly motivated, recognizing that most people need help clarifying complex situations. It is likely some of both. However, regardless of the politician's motivation, errors of omission are rampant.

Bertrand Russell (1872-1970) - the late, great mathematician and philosopher's timeless aphorism reminds us of the politician's reasoning challenge:

"The whole problem with the world is that fools and fanatics are always so certain of themselves, and wiser people so full of doubts."

For the successful data explorer, recognizing confirmation bias is not enough—we must also understand why it is so difficult to detect. Cognitive biases arise from the emotional parts of the brain lacking language. [iv-a] Aside from a vague feeling, we receive no clear signal when operating under their influence. This makes it easy to misweight emotion-based data; sometimes leaning too heavily on it, other times dismissing it entirely.

Statistics provide the corrective lens. They expand perspective beyond the narrow and potentially biased samples of personal experience. By integrating broader data with our own impressions, we can correct for bias and adopt an outside-in view—one that moves us closer to reality than intuition alone ever could.

Please see the following VidCast for more information on how confirmation bias leads to reasoning errors. This VidCast shows the slippery slope of how confirmation bias may devolve to cancel culture and allow others to determine an individual’s self-worth. Political leaders may aspire to this level of followership. Social Media echo chambers are a hotbed for confirmation bias and cancel culture.

To summarize, the case for being a successful data explorer:

a) data exploration is important in the data abundant / attention scarcity era,

b) data exploration is tricky to manage,

c) data exploration requires a statistical understanding, and

d) data exploration benefits from a robust decision process to appropriately manage.

The majority of the article is an intuitive primer for a core descriptive statistics framework called statistical moments. For those interested in the mathematical foundations behind the concepts discussed, please see our Statistics Primer. The primer is helpful for a deeper understanding but not necessary to gain value from the rest of this article. Following the statistical moments sections is an introduction to applying these moments to your ever-changing and diverse life -- called Being Bayesian and Practicing Pareto.

From Certainty to Wonder: The Incremental Knowledge of Statistical Moments

The method of moments shows how knowledge builds step by step. Each successive moment adds a new layer of insight into a distribution. The paradox is that while the earliest moments provide certainty, the higher moments teach us more about uncertainty—they sharpen our awareness of what we do not fully know.

The 0th moment guarantees certainty at the most basic level: the probabilities of all outcomes sum to one. Something will happen.

The 1st moment adds another kind of certainty: it anchors us with the distribution’s center, its balance point.

From the 2nd moment onward, the knowledge shifts. We move from certainty about “what is” to awareness of “what could be.” Variance reveals risk and dispersion. Skewness introduces asymmetry—outcomes leaning one way or another. Kurtosis exposes fat tails, where rare and extreme events dwell. Each higher moment increases our grasp of shape, but also forces us to admit how much of the world lies in uncertainty.

This paradox—that learning more makes us aware of how much we do not know—is central to both statistics and life.

Mathematician William Byers describes science itself as a continuum. [ii] At one extreme is the science of certainty, where laws and models provide predictable clarity. At the other extreme is the science of wonder, where mystery, uncertainty, and possibility dominate. The statistical moments trace this same continuum. At the left end, the first moments describe a more certain world. As we move rightward, risk, variability, and uncertainty increasingly enter the picture. At the far edge, unknowable fat tails and ignorance give way to wonder.

How statistical moments map to science

0th–1st moments: Certainty — the grammar of probability ensures outcomes exist and defines a center.

2nd moment: Risk — known dispersion around the mean makes outcomes more or less reliable.

3rd–4th moments: Uncertainty — asymmetry and fat tails push us into less predictable/more unknown territory.

Higher moments: Wonder — extreme irregularities and ignorance remind us that models can never capture all possible outcomes.

The remainder of this article explores the statistical moments along this continuum, proceeding from the science of certainty to the science of wonder, and ultimately to the practical task of managing ignorance.

2. Don't Be a Blockhead

0th moment: unity

We now begin our exploration of the statistical moments, starting with the 0th moment. To guide this journey, we use two tools: the Big Block, representing the set of all possible outcomes, and the X–Y framework, where X is the situation carried from the past into the present and Yn the possible outcomes extending into the future. These tools provide a consistent way to interpret each moment, from the baseline of unity to the higher moments that add center, spread, asymmetry, and shape.

The 0th statistical moment represents unity—the fact that the probabilities of all possible outcomes sum to one. It tells us nothing about center, spread, or shape; it simply ensures that something will occur. Like conservation laws in physics, it is the baseline condition: existence without structure. In this sense, unity resembles a state of maximum entropy—where order dissolves into uniformity. Just as black is the absence of light, unity is existence stripped of attributes. It is the inert default, closer to death than to life. This contrast highlights why the higher moments matter—because they describe the information and structure that make life possible.

Unity refers to a block of unknown outcomes: our life has situations lacking certainty but able to be understood with probabilities. These probabilities describe potential differing decision path outcomes, like—“If I do X, then Y different outcomes could occur. Each outcome path Yn carries its own unique ‘will it happen’ probability.” Because we have imperfect information and the world is dynamic, the X situation has a set of Y outcomes, including some unknown outcomes. Unity means that, while we may not be able to anticipate a situation’s outcome, something WILL happen. In the unity state alone, we are unable to differentiate the set of potential outcome paths. They are mushed together like a big blob. Differentiating potential outcomes will come in the later statistical moments.

Keep in mind that any given situation, X, often involves many attributes. Formally, we could represent this as Xn, with n signaling the multiple factors shaping a situation—ranging from the influence of others to our own genome and earlier environment. For simplicity, we will roll these attributes into a single representation, X, the situation itself. This X–Y framework and the “Big Block” will be used throughout the statistical moments discussion.

Think of X as the input situation carried from the past into the present, and Yn as the possible outcomes carried from the present into the future. In our framework, we collapse the multiple attributes of X into a single fixed state—the current situation you face. This reflects the idea that your past has already occurred and, for our purposes, is assumed fixed. Like sunk costs in economics, nothing can change it, even if some details remain unknown to you. What matters is that you retain full agency in the present to shape future outcomes. The choices you make today can still influence which of the multiple Yn paths becomes reality.

Uncertainty enters in two forms. Epistemic uncertainty arises from gaps in knowledge about the current situation X: information may exist, but is not known to you at the time of decision. Aleatory uncertainty arises from the inherent randomness of future outcomes Yn, which cannot be eliminated even with perfect information. In other words, the past is fixed, but our understanding of it may be incomplete (epistemic), and the future is inherently variable (aleatory).

An analogy may help. Imagine you are playing cards. The hand you hold is your X. The cards are fixed—you cannot change them—but if you have not looked carefully, epistemic uncertainty clouds your knowledge of what you already hold. Once you play, the draw and the responses of other players introduce aleatory uncertainty—random outcomes beyond your control. In this way, X is shaped by past constraints, while Yn reflects the variability of what comes next. Keeping this X–Y framework in mind will help clarify how statistical moments describe both what we know and what remains unpredictable.

Earlier in my career, I had a wise boss named Bill Minor. Bill ran a Mortgage and Home Equity operation for a Wells Fargo legacy bank. Bill was famous for saying:

"Not making a decision is a decision."

While Bill may not have been aware of it, he was describing unity. His saying means that regardless of whether or not we make an explicit decision, an outcome is inevitable. In his own special way, my former boss was encouraging me not to be a blockhead.

When the situations of our lives blur into a single block of unknown outcomes, we lose clarity. This low-fidelity view is the ultimate “forest versus trees” challenge. Unity sits at such a high level that all potential outcomes—the individual trees—collapse into one indistinct forest. In that state, the present may feel overwhelming because the paths forward are hidden, even though multiple outcomes remain possible.

Unity, like the other moments, has a basis in physics. Unity corresponds to maximum entropy, void of the diverse structures necessary to support life. Higher entropy is associated with random disorder which, in a human being, pertains to death. Lower entropy is associated with the order needed to support life’s rich diversity.

It may seem strange that order supports diversity. It is our highly ordered cells, neurons, DNA, and related building blocks making up our skeleton, organs, muscles, and other human structures. It is those highly ordered building blocks allowing our human structure to be so different. Think of them like Legos. Each Lego may be simple and uniform, but in massive numbers, they enable an astonishing diversity of structures. Our Lego architect is natural selection, working on the blueprint of the genome shaped by the environment. Without that ordered foundation, we would all just be part of the same undifferentiated primordial soup.

Religions and philosophies have long tied this high-entropy unity to both the beginning and the end of life. The Bible describes humans as returning to dust—a uniform, entropic state—after death: Dust is the uniform, high entropy default state from which lower entropy life arises and ultimately returns: "By the sweat of your brow you will eat your food until you return to the ground, since from it you were taken; for dust you are and to dust you will return." - Genesis 3:19

Stoic philosophers echoed this truth. As Seneca wrote: “‘does death so often test me? Let it do so; I myself have for a long time tested death.’ ‘When?’ you ask. Before I was born.” - Seneca, Epistles 54.4-5

Appreciating unity is easier when contrasted with diversity. Darwin helped the world understand how diversity in life is sustained by natural selection and genetic mutation. Mutations introduce variation, while natural selection favors the traits that improve survival and reproduction. Over generations, this process drives the complexity and richness of life.

Going beyond moment 0: Why is diversity important? Just as diversity in biology sustains life, diversity in human activity sustains prosperity. If all people were the same, there would be no gains from trade. As Russ Roberts put it: “Self-sufficiency is the road to poverty.” David Ricardo’s theory of comparative advantage reinforces this—showing that even if one person or nation is better at everything, mutual gains arise when each specializes in what they do best. Our diversity makes markets work, and money is how we vote for the specialties that best reduce our entropy. [iv-b]

Given human life expectancy has more than doubled in the last 200+ years and global economic output (GDP) has increased 50x during that time, our entropy-reducing prosperity has rocketed ahead because of diversity-enabled market economics and the benefits of trade.

The 0th moment reminds us that unity alone is inert—existence without attributes, high entropy without structure. It is the foundation of probability but not yet the source of meaning. Only by moving into the higher moments do we begin to separate the forest into trees, the blob into structured outcomes, and existence into life. Unity is the block. The higher moments carve that block into the patterns that sustain diversity, choice, and prosperity.

To explore the impact of entropy across our lives, including the sources for the growth in global economic output and life expectancy, please see the article:

To conclude the unity section, the next graphic shows the resolution for the Big Block. People have an amazing potential to see through the Big Block as a structured set of possible outcomes. By applying the lens of statistical moments, we gain more than just an understanding of life’s knowns (Y1)—we also learn to quantify risk (Y2), manage uncertainty (Y3), and guard against the blind spots of ignorance (Y4). The language of data and its grammar rules empower you to interpret your likely outcome paths with greater clarity and confidence. This deeper understanding helps you make better decisions and get the most out of your life.

Building on our discussion of epistemic and aleatory uncertainty, we now introduce the HRU framework as a way to organize data into a useful structure—distinguishing between what we know and what we do not know, both today and in the future. This framing helps reveal how the statistical moments provide clarity for decision-making in environments that are less than certain.

The next graphic describes the Big Block’s four Y outcome types. These four paths are laid out along two time-based dimensions: (1) the situation—what we know today—which aligns with the X plane in the Big Block, and (2) the outcome—what the uncertain future holds—which corresponds to the Yn outcome paths. This aligns with standard statistical notation, where X represents independent variables (inputs or conditions), and Yn represents dependent variables (future outcomes).

The HRU framework serves as the interpretive key to the Big Block, mapping each Y-path to one of four outcome types: Y1 – Known, Y2 – Risk, Y3 – Unknown, and Y4 – Ignorance. It builds on those same X and Y dimensions to help decision-makers categorize situations and anticipate uncertainty. These outcome types correspond with the statistical moments that follow—each moment offering a lens to interpret different distributions and behavioral patterns. The HRU framework does more than label uncertainty—it enables confident, structured decisions through intentional information curation and belief updating.

For more information on how to manage certainty and uncertainty in our many life situations, please see the HRU framework. The HRU framework is described in the article:

The remaining statistical moments will refer back to "The Big Block" and the four Y outcome types:

Y1: Known → 1st moment, Expected Value

Y2: Risk → 2nd moment, Variance

Y3: Unknown → 3rd moment, Skewness and 4th moment, Kurtosis

Y4: Ignorance

3. Our Central Attraction

1st moment: the expected value

Next, the first moment measures how a diverse distribution converges toward a central point. This is the simplest description of a diverse population. This is where a known single, solid white path (Y1) is drawn through the big block. As will be shown in the following Galton Board example, gravity is the great attractor toward the center. There are 3 different central point measures, known as the average. The different average measures are the 'central tendency' descriptors of a distribution - that is - the mean, median, and mode. Each average measure is a little different and their difference gives us clues to consider when applying the remaining moments.

Y1 Known Path

In a normal distribution, the mean, median, and mode align. The degree to which they diverge offers an early signal of non-normality, often pointing to skew. Yet this signal alone is incomplete. It is like checking only the average score on a class test while ignoring how widely scores are spread or whether a few extreme results distorted the picture. To truly understand the shape of a distribution, we need the fuller grammar of the other statistical moments -- variance, skewness, and kurtosis.

The normal distribution is a natural standard often used as a comparative baseline between distributions. This standard originates from the physics of calm, natural environments with independently moving molecules and atoms. Normality is that calm, constant-gravity state before excess energy is added to a system. That normal-impacting energy may certainly result from human intervention. Since humans intervene with many systems, we can expect the three average measures to differ.

Is the normal distribution a misnomer? The word 'normal' in "normal distribution" may seem like a misnomer. Since normal distributions are the exception in human affairs, not the rule, perhaps a better name would be the "abnormal distribution" or the "unusually calm distribution."

When considering a distribution, its central tendency measures should be compared to (a) the benchmark of normality, (b) each other, and (c) the context of that distribution’s past reality. This past reality functions much like priors in Bayesian inference, serving as a starting point for interpreting new evidence—a theme we will return to later in the article. These 3 comparisons give us clues to interpreting data the way an artist paints a picture. The tools for an artist are paint and a paintbrush. However, for our intrepid statistical moments language interpreter, their tools are data and statistics. These clues are only a starting point. The clues suggest a line of inquiry involving the other statistical moments.

The data interpreter to artist comparison. The next picture shows a window looking out to a mountainous landscape. That landscape is filtered by a black screen covered with data. That data describes the landscape. It is the statistics that help our data interpreter understand that landscape through the data.

Similarly, let’s say our data interpreter was also an artist. They would use paint that represents the colors and textures of what they see in that mountainous landscape. Our data interpreter turned artist will then use the paintbrush to apply that paint to the canvas as their understanding of that landscape.

In this way, data and statistics are just another way for us to interpret our world… like the way an artist interprets the world through their painting.

An example of using the central tendency measurements: Please consider the retirement age of the American population. The retirement population tends to bunch around the mid-60s years of age and then have a long tail toward the end of life - in the mid-80s or beyond. This means most people retire in their 60s, but some wait longer or work to the end of their life. The U.S. Government provides incentives—such as Social Security—that enable retirement in one’s mid-60s. These incentives function as behavioral attractors, nudging large populations toward a common outcome. In physics and dynamical systems theory, an attractor is a state or set of conditions toward which a system naturally evolves. Chaos theory extends this concept to complex systems, where even unpredictable behaviors are drawn toward underlying patterns or paths. We will explore how such attractors influence statistical distributions and decision dynamics in later sections. But not everybody can or wants to retire in their 60s. As a result, the mean age will be higher than the median age. This makes sense in the American cultural context. Think of the American retirement culture as a human intervention causing the central tendency measures to differ. But what if we saw a retirement distribution where the mean and median were much closer together than in the typical American retirement context? What should be concluded? Relevant questions are:

Is this an American population or perhaps a different culture that does not intervene by relating retirement to not working? In many cultures outside the United States - they either do not have a word for retirement, their definition of retirement relates to change or growth, or retirement means support for people as they age and in their ability to be productive throughout their life. [v]

Perhaps there is a measurement error. Maybe American retirement activities are being improperly recorded as work in the data. Also, the opposite could be true for that initial data set showing the mean-median skew. Perhaps Americans are working after retirement age but their activities are not captured as work. For example, if someone volunteers at a children's hospital, should that activity be considered work? Just because someone does not get paid does not mean that activity does not either a) create economic value on par with paid activities or b) provide "payment" in non monetary form, like feeling good about helping children.

If it is an American population, are there context clues as to why the population's central tendency measures are not as expected? Perhaps this is a more agricultural community where retirement age and practices are more tuned to the natural rhythms of the farm. Thus, because there is little government impact, the people's attitudes toward retirement are less dependent on a central government policy and more attuned to life's rhythms.

What is the definition of retirement? Are the 2 datasets using the same definition?

What can the other statistical moments tell us about this population? The other statistical moments will be explored later.

Thus, retirement programs are a social intervention causing less normal distributions. Less normal distributions can be interpreted by using the remaining statistical moments. But first, the normal distribution as a natural standard will be explored with a wonderful simulation. Unlike the retirement distribution, this is where the 3 central tendency measures are the same.

A population simulation - the normal distribution as a natural standard. The Galton Board was invented by Sir Francis Galton (1822-1911) [vi]. It demonstrates how a normal distribution arises from the combination of a large number of random events. Imagine a vertical board with pegs. A ball is dropped on the top peg. There is a 50% chance, based on gravity, that the ball will fall to the right or the left of the peg. The '50% left or right' probability occurs on every peg contacted by the ball as it falls through the board. Think of each peg as representing a simple situation where there are only 2 possible outcomes within the block, left or right. Gravity is the operative natural phenomenon being captured by the Galton Board's design. After many balls are dropped, the result is a normal distribution. More balls are found central than on the outliers of the distribution.

This shows what happens when elements of nature, like atoms or molecules, act independently in a calm, natural system. The outcome often resembles a normal distribution. In the Galton Board, the 'elements of nature' are represented by the balls. The 'natural system' is represented by gravity and the pegs.

Feel free to play with the Galton Board simulation next. Below the box, please activate the "high speed" and "histogram" boxes. Then activate the single arrow to the far left to initiate the simulation. Watch as the Galton Board works its magic. The result is a normal distribution!

Thanks to Wolfgang Christian for this wonderful digital rendering of The Galton Board.

Unlike unity and the dark, undifferentiated big block, we have now added light by drawing a single path through the big block. The average is like the view of a forest as a single entity or path - even though - we know that it is the diversity of trees found in the forest that makes the forest interesting. Perhaps, the fact that the forest's color is usually green or the path through time changes color with the seasons - the average - is interesting to some. The forest’s average does help us understand its dominant attractor. The forest's dominant attractor, as with most natural systems, is gravity. But it has other environmental attractors, like temperature, sunlight availability, wind, and soil quality. For example, the degree to which there was past volcanic activity will greatly impact the quality of the soil available to the forest’s inhabitants.

However, it is the trees within the forest that possess a diverse array of characteristics—such as colors, leaf shapes, height, width, and the spectrum between evergreen and deciduous foliage—that are even more interesting. Each species and individual plant develops a nuanced relationship with its environment. Charles Darwin, natural selection, genetic mutation, and epigenetics help us understand the great adaptability and resulting diversity of life. While genetic mutations alter DNA sequences over generations, epigenetics refers to changes in gene expression triggered by environmental factors—without altering the underlying DNA. These epigenetic mechanisms allow organisms to adapt more rapidly to their surroundings, complementing the slower process of natural selection.

Next, we begin our exploration of the diverse trees found in the forest.

4. Diversity by Degree

2nd moment: the variance

The second moment helps us understand the distribution’s diversity. The second moment describes the variance of that distribution. That is, how the observations of a distribution differ from its mean. A high variance indicates the population is more diverse than a low variance. In our big block, multiple paths may be drawn through the big block. (Y2) These are the white, dotted risk lines representing probabilistic outcomes. In our forest and trees example, this is where the tree species vary in predictable ways from the forest average.

Y2 Risk Paths

Also, the variance of the population may lead to insights about more uniform subsegments within that population. Returning to our forest and trees metaphor—a high characteristic variance in the overall forest population may prompt us to segment by species. Analyzing individual species often reveals lower within-species variance compared to the total forest. The variance of each sub-population (e.g., oak, maple, cedar) contributes to the overall forest variance, weighted by the relative frequency of each species. These sub-populations also allow us to infer the probabilities and entropy associated with each species—how predictable or uncertain each tree type is relative to the broader forest. A highly variable forest will include very different outcomes. Some species are fragile and less likely to survive, while others are more resilient. This relates to low and high entropy. However, the forest's resilience is generally strengthened with species diversity. While environmental changes may kill off a particular species, diversity makes the forest more resilient to withstand many environmental changes.

This same logic applies to stock and fund investing. The volatility (i.e., variance) of individual stocks within a mutual fund aggregates to determine the fund’s total variance. However, diversification across different stocks reduces the fund’s overall entropy—the level of unpredictability in performance—compared to its most volatile components. Just as a forest composed of many distinct but internally stable species has lower entropy than one dominated by a chaotic mix, a mutual fund’s structure lowers the risk of extreme, unpredictable outcomes. An individual company can experience collapse or meteoric growth, but a well-designed fund absorbs these events, producing a lower-entropy, lower-variance system that provides both upside returns and downside failure protection.

In the same way tree species help you understand the composition and resilience of a forest, fund types help you understand the makeup of investment strategies. A high-growth domestic equity fund is like one fast-growing, sun-seeking tree species, while a stable, dividend-producing utility fund is like another slower-growing, shade-tolerant species. Each contributes differently to the overall ecosystem, and understanding these "species" of funds allows you to build a more robust and adaptable investment forest.

That is why diversification is so important!

In the earlier Galton Board example, a normal distribution was demonstrated with a mean, median, and mode of 0. There were an almost equal number of observations above 0 as below 0. However, while many balls landed closer to 0, not all did. In fact, a small number of them fell relatively far from the 0 central point. This variance from the central point occurred because gravity attracted the balls, but there was still a 50% chance the ball would fall away from the center at each peg. As the ball fell away from the center of multiple pegs, the distance from the central point increased. For example, with 10 levels in the board, the probability of a ball falling to the furthest point on either side—by consistently going the same direction—is (0.5)^10, or just 0.098%, less than one in a thousand. While rare, such extreme outcomes are still possible. We will explore the importance of these low-probability, high-impact tail cases further in the Moment 4: Kurtosis section.

Francis Galton’s work was informed by Adolphe Quetelet (1796-1874). He was one of the founders of using statistics in social science and developed what he called “social physics.” Quetelet's social physics was an attempt to quantify the social sciences in the same way that Newton had quantified physics. Quetelet noticed that social statistics, like crime, stature, weight, etc., fit a normal distribution. Quetelet’s work was pure, in that his social observations were generally independent and occurring in relatively unaffected social environments of his day. This was at a time when there were few social programs or interventions causing a less than normal outcome like in the prior retirement example. In this way, the pure environment was more like how gravity impacts atoms and molecules.

However, often gravity is not the only attractor. As we will explore throughout, human affairs - by definition - will have other attractors. Quetelet's and Galton's work is useful as a gravity-initiated baseline, but in today's complex and policy-impacted world, their work is often insufficient to fully understand most situations alone via the data. The degree to which other attractors impact our past reality is the degree to which our past reality differs from the normal distribution expected value and variance.

Another representation of a variance is a standard deviation. The square root transforms the variance into an easier-to-compare standard deviation. In a normal distribution, about 2/3rds (68%) of the distribution can be found one standard deviation above or below the mean. As such, if 1,000 balls were dropped through the Galton Board, approximately 680 would land within one standard deviation of the mean (centered around 0). That means about 320 balls would fall outside this range, with roughly 160 landing to the left and 160 to the right of one standard deviation.

For some, this may be the only moment to help them understand the world’s diversity. This is unfortunate. The variance alone only suggests a high-level degree of diversity. However, variance does not help describe the essential manner of a population's diversity. That is, the "why" behind the degree to which a variance is higher or lower. In fact, with variance alone, one could conclude the manner of diversity is consistent and well-behaved. As we will discuss next, many systems do not lend themselves to this simplified conclusion.

Well-behaved systems are better described by variance: Sometimes, natural systems are reasonably well-behaved. Think of convection currents available in day-to-day life - such as stirring cream in coffee without a stirrer or defrosting dinner on the kitchen counter. These natural and calm systems are well-behaved. The variance of cream molecules or heat electrons does behave in standard or "normal" ways. Of course, if you stir your coffee with a spoon or microwave your frozen dinner - a human intervention - the excited electrons and molecules will act in less-than-normal ways.

Not well-behaved systems are fooled by variance: Variance, as a descriptive statistic, can be calculated for any sample. The difficulty lies in comparison. Variance observed in calm, stable environments of the past cannot be meaningfully compared to variance in today’s chaotic markets.

Human systems are rarely well-behaved, and variance often misleads. Consider a stock market jolted by a news event. Trading volume surges, energy enters the system, and price distributions deviate sharply from normality. In shorter timeframes especially, stock prices display extreme volatility and directional persistence. Variance from calmer times, because it captures magnitude but not the character of diversity, can therefore give a deceptive picture of risk.

Many are prone to stopping their past reality investigation at the second moment. Unfortunately, doing so forces us to make inappropriate simplifying assumptions about an extraordinarily complex world. Understanding dynamic stock market systems, like other dynamic systems, require deeper statistical moment investigation than stable coffee stirring systems. In today's world, well-intended social policy creates new attractors diverging from gravity or the well-behaved "pure" environment. It is the third and fourth moment that opens the door to a world teeming with uncertainty and complexity. This is our Y3 path from the big block.

For an example of managing volatility in the context of building personal wealth, please see the article:

5. The Pull of the Outliers

3rd moment: the skewness

The third moment is called skewness. It mathematically quantifies inertia by measuring the asymmetry of a distribution, capturing how extreme values (outliers) influence the central tendency and create resistance to change. For example, in a positively skewed distribution, the long tail of higher values creates a directional pull that shifts the mean upward, reflecting inertia against reverting to symmetry. This property makes skewness a valuable tool for analyzing the dynamics of diverse systems where extreme values drive collective behavior.

A helpful, minimally skewed baseline example is the Galton Board—a device where balls fall independently through a series of pegs and form a bell curve. This represents a stable, natural system. Because the only attractor is gravity, and the balls act independently, the resulting distribution is symmetric with no skewness or inertia.

Now imagine a fictional twist: each ball is lightly magnetized. If one ball drifts to the left, it slightly increases the chance that the next ball will be pulled in the same direction. We have moved from a single attractor (gravity) to two attractors (gravity plus light magnetization). Over time, this interdependence introduces skewness into the distribution.

While inanimate objects rarely interact this way, people almost always do. We are social creatures, and our choices influence one another. In domains like stock investing, this magnetic pull shows up as herding behavior, where social influence drives outcomes away from symmetry and toward asymmetry.

In the normal distribution introduced earlier, independence ensures no inertia. Each observation is uninfluenced by the others, so no cumulative momentum or directional bias arises. But inertia is a regular feature of the diverse, interdependent distributions found in human affairs. Skewness captures how momentum among observations creates inertia, pulling systems away from equilibrium and symmetry.

By the way, momentum and inertia are related but serve distinct roles in both physics and human behavior. Momentum is the tendency of a body in motion to stay in motion, influenced by both speed and direction. In statistics, and particularly in the context of skewness, momentum reflects how outliers or extreme values introduce directional pressure—pushing the center of the distribution away from the mean. In financial markets, for example, momentum from repeated behaviors like herding amplifies trends, creating skewed outcomes and widening dispersion.

Inertia, by contrast, is the resistance to any change in state, whether that means starting, stopping, or altering direction. In human behavior, inertia shows up as the reluctance to shift habits or beliefs—even when new evidence or direction is warranted.

In human affairs, individuals have different kinds of attractors. In our day-to-day lives, it is the legal environment, culture, and our social connections creating a behavioral momentum that guides us toward varying outcomes. The social attractor is a powerful alternative attractor that may inappropriately influence our decisions. It does not mean those outcomes are guaranteed, but those environmental forces relentlessly shape our trajectories. This is like the inevitability conjured by the aphorism: "Water finds its own level."

In terms of the important decisions of our lives:

a) people are strong social creatures and tend to herd together when making decisions, and

b) most significant decisions have uncertainty.

The power of our social nature should not be underestimated as an inertia-generating force. Sociologist Brooke Harrington said that if there was an E=MC^2 [energy equals mass times the square of the speed of light, Einstein's Equation] of Social Science, it would be that the fear of social death is greater than the fear of physical death. [vii]

This means if your social reputation is on the line (a) and you have financial decisions laced with uncertainty (b), you are more likely to follow social cues – even if those social cues lead to a worse outcome for you. An example of the “social death > physical death” fear phenomenon is our nature to sell investments into falling stock markets. This behavior contributes to measurable skewness in stock market data by creating asymmetry in price movements. When large groups of investors sell in response to falling markets, they amplify downward momentum, shifting the distribution of returns and causing a longer tail on the negative side. This herding behavior reinforces the skewness, making market recovery slower and more challenging. Even though, financial theory and centuries of experience tell us buying diversified investments in falling markets is the best approach to maximizing wealth.

So, the gap created by an individual's uncertainty is often filled by our social nature to follow others. As a result, an individual's observations can attract followers. Herding behavior generates momentum—a self-reinforcing directional trend in decisions or asset prices. Over time, momentum builds into inertia, as people continue in the same behavioral pattern, resisting the effort required to re-evaluate or change course.

Skewness does not measure inertia or momentum directly, but it reflects their impact on a distribution. For example, stock prices often exhibit skewness when momentum drives excessive buying or selling. In recessionary environments, negative momentum builds as prices fall and people follow others by selling—reinforcing the downward trend.

If people were “normal”—meaning we acted like the independent, random balls on a Galton Board—then inertia would not persist, and prices would fluctuate symmetrically around a stable mean. But people are not normal! At least, not from a statistical standpoint.

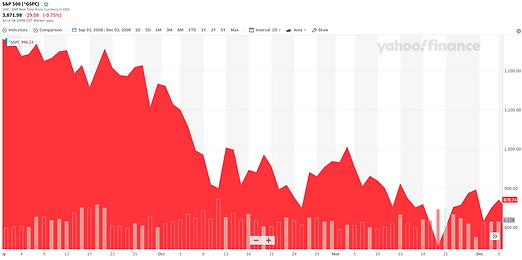

The chart below illustrates this dynamic during the 2008 Financial Crisis, where herding behavior amplified negative momentum. Rather than stabilizing, prices continued to fall—showing how inertia can drive markets far from equilibrium.

The S&P 500 from September 1 to December 1, 2008. This demonstrates an almost 40% drop during the "dark days" of the Financial Crisis.

Financial experts know that inertia can be destructive to an individual’s wealth. Objectively, a wealth-generating habit is to "buy low and sell high." However, our nature often pushes us to "buy high and sell low," especially in chaotic, sell-off markets where fear and herding behaviors dominate. This wealth-preventing perversion is part and parcel of social inertia reflected in the skewness of the 3rd moment. While skewness does not directly measure inertia, it captures the asymmetry in outcomes that such persistent behaviors produce.

These inertial patterns persist because our emotional instincts—especially fear and loss aversion—often overrule objective analysis. Arguably, the single most important contribution of behavioral economists is the commitment device. This tool helps individuals override their human tendencies when momentum-driven skewness leads to inertia and poor financial decisions. For example, an automatic investment plan that continues to invest even during market downturns is a commitment device in action. It enables dollar cost averaging—a proven strategy—by removing the emotional resistance people face when manually deciding to invest in a falling market. Commitment device to the rescue!

In expanding economic environments, the opposite occurs. Stock prices tend to gain upward momentum as people follow the persistent buying of others with buying of their own. Over time, this behavior becomes entrenched—a form of inertia—where individuals continue to buy simply because others are buying, not because of underlying fundamentals.

The chart below shows the S&P 500’s performance over the decade following the 2008 crisis—nearly a fourfold gain. Investors who summoned the courage to stick with their plan and resist panic during the market’s free fall were rewarded with massive long-term gains. This sustained rise reflects how positive momentum, reinforced by herding behavior, can drive markets upward. Inertia takes hold as investors keep buying, often powered more by crowd psychology than by fundamentals.